About Me

Hello, I’m Meiyun Wang. I am currently a second-year PhD student in the Department of Systems Innovation, School of Engineering, at The University of Tokyo.

💡 Research Focus

My research focuses on explainable, trustworthy, and applicable AI.

🔄 Ongoing Projects

Causal Reasoning of Large Language Models: Exploring the causal reasoning capabilities of LLMs using synthetic data.

Large Language Models as Recommendation Systems: Leveraging LLMs for recommendation tasks with a focus on numerical data.

📚 Previous Projects

1. Mitigating Hallucinations in Large Language Models: Training privacy-sensitive student models using synthetic data generated by large language models.

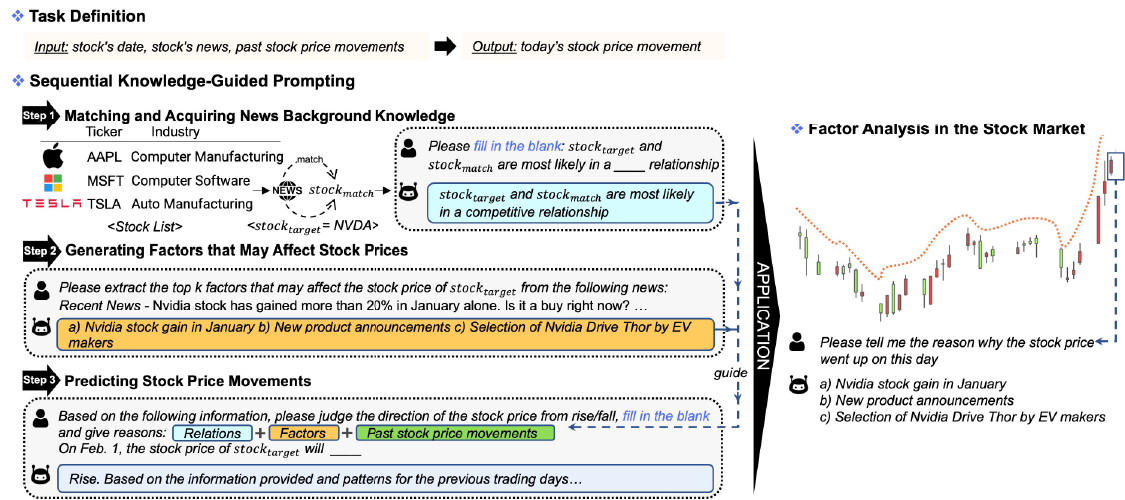

2. Factor Extraction for Time Series Data Explanation Using Large Language Models: Using large language models to extract explanatory factors and make time series forecasts.

3. Risk Prediction by Combining Graph Neural Networks and Temporal Recurrent Neural Networks: Integrating graph neural networks with long short-term memory (LSTM) networks for risk prediction.

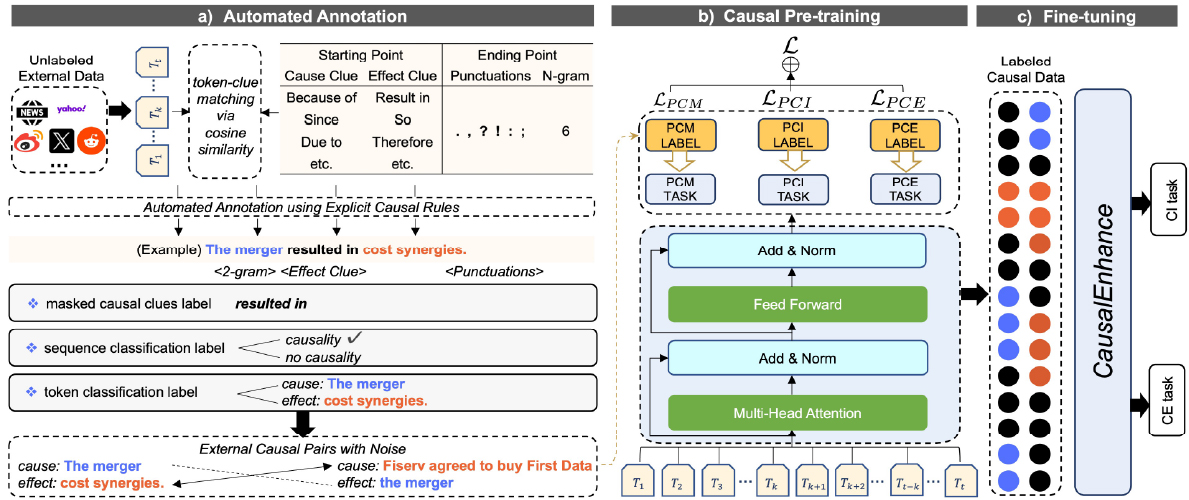

4. Causality-Oriented Pre-Training Framework and Data Augmentation Methods: Developing a pre-training framework and data augmentation methods focused on causality extraction.

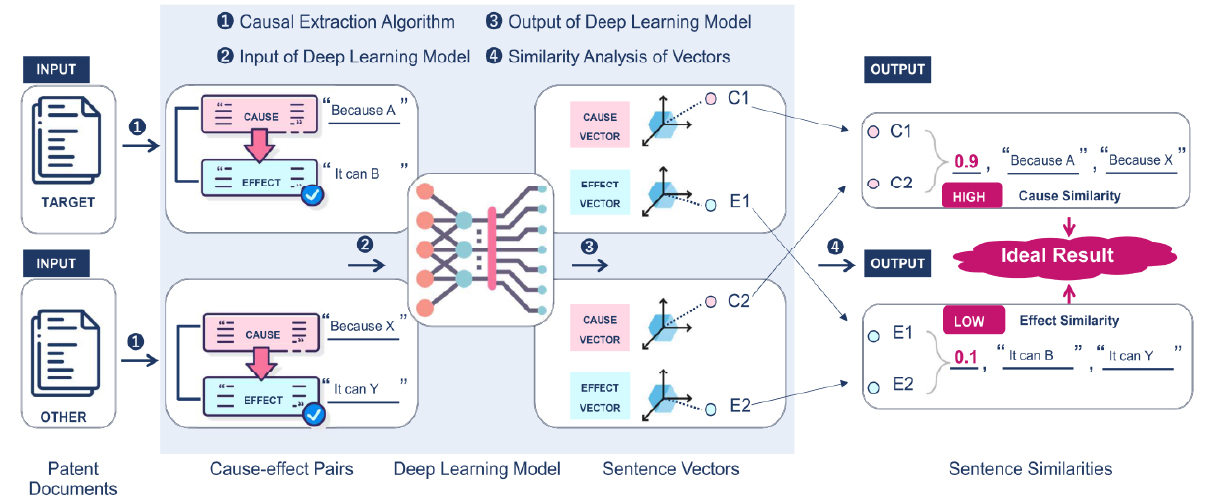

5. Application Exploration of Causality Extraction in Patent Texts: Exploring the application of causality extraction techniques in patent texts.

🔥 News

- 2024.08: One new paper on arXiv: Interactive DualChecker for Mitigating Hallucinations in Distilling Large Language Models

- 2024.07: This summer I will join Amazon Science as a Data Scientist Fellow, applying large language models (LLMs) to customer recommendation problems.

- 2024.05: 🎉 One first-author paper was accepted at ACL 2024.

📝 Publications (first author)

- [ACL 2024]: LLMFactor: Extracting Profitable Factors through Prompts for Explainable Stock Movement Prediction.

- [Preprint]: CausalEnhance: Knowledge-Enhanced Pre-training for Causality Identification and Extraction.

- [WPI 2023]: PatentCausality. Discovering new applications: Cross-domain exploration of patent documents using causal extraction and similarity analysis.

- [JSAI 2023]: New Intellectual Property Management Method Aiming at Expanding Technology Applications and Secondary Development, Meiyun Wang et al., The 37th Annual Conference of the Japanese Society for Artificial Intelligence, 2023.

- [ICAIF 2022]: A New Approach to Assessing Corporate R&D Capabilities: Exploring Patent Value Based on Machine Learning, Meiyun Wang et al., 3rd Workshop on Women in AI and Finance, 3rd ACM International Conference on AI in Finance, New York, USA, Nov 2, 2022.

🎖 Honors

- 2024 ~ 2025: Fostering Advanced Human Resources to Lead Green Transformation (SPRING GX), The University of Tokyo.

- 2023 ~ 2025: World-leading Innovative Graduate Study Program on Global Leadership for Social Design and Management (WINGS-GSDM), The University of Tokyo.

- 2023 ~ 2025: Data Science Practicum, The University of Tokyo.

- 2023 ~ 2025: Designing Future Society Fellowship, The University of Tokyo.

💻 Internships

- 2024.08 - 2024.11: Amazon Science, Data Scientist Fellow.

- 2023.11 - 2024.01: Mizuho, Tokyo.

- 2022.04 - 2022.07: Google STEP, Tokyo.

- 2020.11 - 2021.08: Tencent, Shenzhen.

- 2020.08 - 2020.11: PingAn, Shenzhen.